An explorer from Quzhou

I am a senior research scientist at NVIDIA Deep Imagination Research Group, where I work on probabilistic modeling of high-dimensional data. Right now, I’m leading our world-model training efforts.

Prior to joining NVIDIA, I earned:

- A Ph.D. in Robotics from Georgia Institute of Technology (IRIM) in 2023, advised by Yongxin Chen

- A Bachelor’s degree from Shanghai Jiao Tong University in 2019, where I taught robots to play soccer and even won a Robo WORLDCUP!

My “generative AI” qualifications:

- I lead NVIDIA’s large-scale diffusion model training, from ablating models to babysitting training runs. We delivered and open-sourced the COSMOS world models and shipped the closed-source Picasso in NVIDIA AI Foundations (and, after two years, a tech report finally saw the light).

- I co-authored DEIS, the first to introduce exponential integrators for diffusion sampling—cutting sampling steps down to 10–15.

- I democratized using generative diffusion models for sampling unnormalized probability densities (e.g., amortized molecular conformer generation) in this paper.

In general, my research approach centers on the representation, learning, and sampling of complex probability distributions.

Email: qsh.zh27 [at] gmail [dot] com

news

see all news →

Jul 1, 2025

Cosmos Predict2 release! A SOTA world model for autonomous driving and robots.

Jan 11, 2025

Cosmos Predict1 release! The first open-source large-scale world model.

Dec 1, 2023

May 16, 2022

ICML22 Wasserstein gradient flow in pixel space!

Apr 30, 2022

Training-free DEIS: 10 steps to generate high-fidelity samples from diffusion models.

Selected publications

See Google Scholar for the full list of publications →

Cosmos World Foundation Model Platform for Physical AI. Whitepaper, 2025. 🎯 NVIDIA’s open-source video world model platform

Student Mentees and Collaborators

I have been fortunate to work with many talented students and collaborators throughout my research journey.

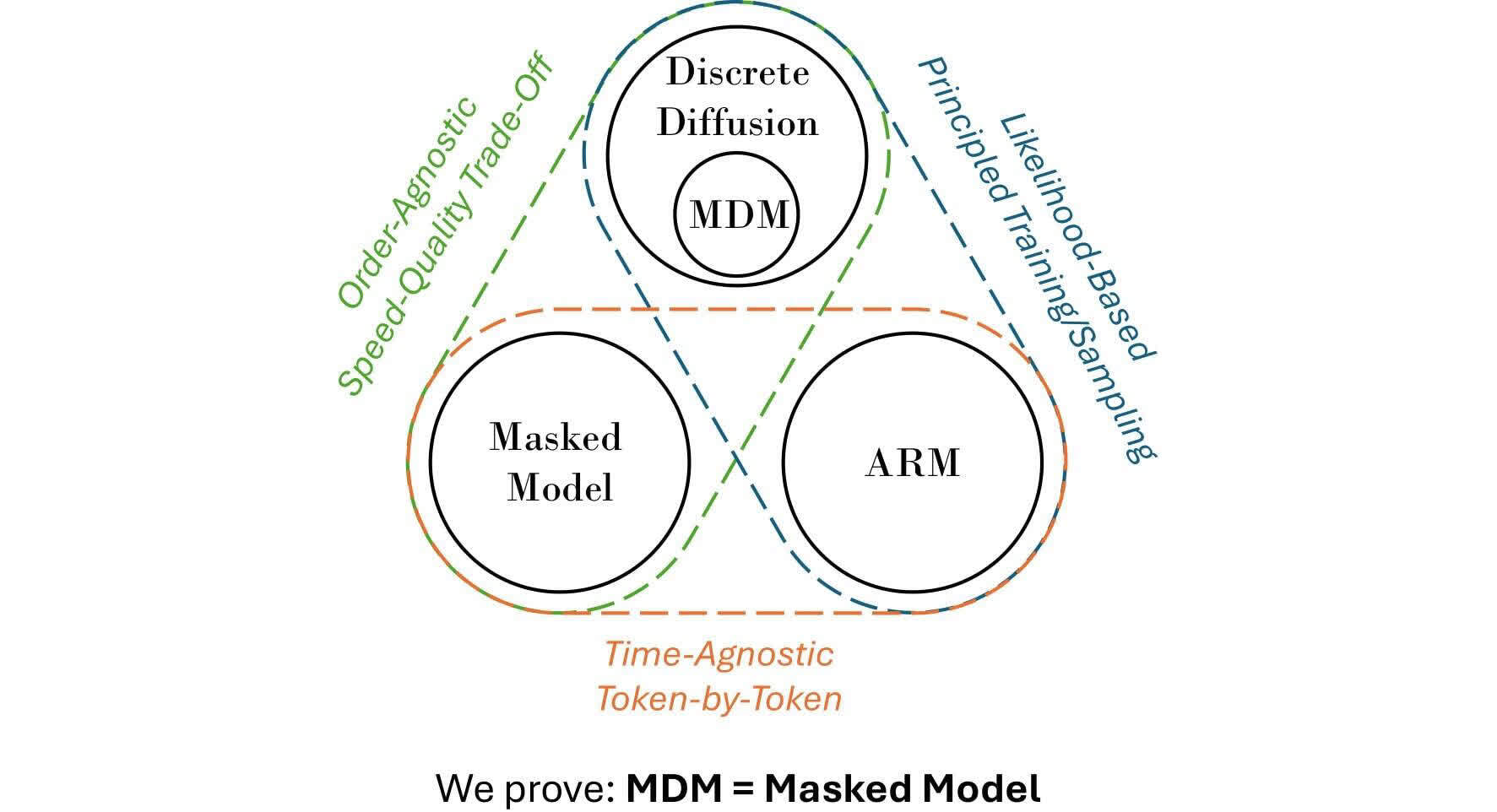

- Kaiwen Zheng (Tsinghua): Discrete Diffusion Models, Direct Discriminative Optimization

- Jiaxiang Tang (PKU, now at NVIDIA): EdgeRunner

- Ali Hassani (GaTech, now at NVIDIA): Sparse Attention for Video Models